For the past seven years or so, the best gaming monitors have enjoyed something of a renaissance. Before Adaptive-Sync technology appeared in the form of Nvidia G-Sync and AMD FreeSync, the only thing performance-seeking gamers could hope for was a refresh rate above 60 Hz. Today, not only do we have monitors routinely operating at 144 Hz, with some going even further, but Nvidia and AMD have both been updating their respective technologies. In this new age of gaming displays, which Adaptive-Sync technology reigns supreme: G-Sync or FreeSync?

For the uninitiated, Adaptive-Sync means that the monitor’s refresh cycle is synced with the rate at which the connected PC’s graphics card renders each frame of video, even if that rate changes. Games render each frame individually, and the rate can vary widely depending on the processing power of your PC’s graphics card. When a monitor’s refresh rate is fixed, it’s possible for the monitor to begin drawing a new frame before the current one has completed rendering, which shows up on screen as a visible tear. G-Sync, which works with Nvidia-based GPUs, and FreeSync, which works with AMD cards, solve that problem. The monitor draws every frame completely before the video card sends the next one, thereby eliminating any tearing artifacts.
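To make the tearing mechanism concrete, here is a minimal Python sketch. It rests on simplifying assumptions that are ours, not any vendor's: V-Sync is off, scan-out occupies the entire refresh period, and a tear occurs whenever a finished frame arrives partway through a scan-out. The function names and the 48 to 144 Hz range are purely illustrative.

```python
from fractions import Fraction
from itertools import accumulate


def torn_refreshes_fixed(frame_times_ms, refresh_hz):
    """Count fixed-rate refresh cycles interrupted by a newly finished frame.

    Simplified model (an assumption): V-Sync is off and scan-out fills the
    whole refresh period, so a frame completing mid-period tears that cycle.
    """
    period_ms = Fraction(1000, refresh_hz)  # exact arithmetic, no float drift
    torn_cycles = set()
    for finished_at in accumulate(Fraction(t) for t in frame_times_ms):
        cycle, offset = divmod(finished_at, period_ms)
        if offset != 0:  # the frame arrived partway through a scan-out
            torn_cycles.add(cycle)
    return len(torn_cycles)


def out_of_range_frames(frame_times_ms, min_hz, max_hz):
    """Count frames whose interval falls outside an adaptive display's range.

    Inside the range the display simply starts a refresh when the frame
    arrives, so only out-of-range intervals are flagged here.
    """
    shortest_ms = Fraction(1000, max_hz)
    longest_ms = Fraction(1000, min_hz)
    return sum(
        1 for t in frame_times_ms
        if not shortest_ms <= Fraction(t) <= longest_ms
    )


# A GPU delivering a steady 50 fps (20 ms per frame) for a tenth of a second.
frames = [20, 20, 20, 20, 20]
print(torn_refreshes_fixed(frames, refresh_hz=60))
print(out_of_range_frames(frames, min_hz=48, max_hz=144))
```

In this toy model, most of the 50 fps frames land in the middle of a 60 Hz scan-out, while the adaptive display has nothing to flag because every frame interval sits inside its supported range.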

Today, you’ll find dozens of monitors, even non-gaming ones, boasting some flavor of G-Sync, FreeSync or even both. If you haven’t committed to a graphics card technology yet, you might be wondering which one is best. And if you have the option of using either, will one offer a greater gaming advantage over the other?

Fundamentally, G-Sync and FreeSync are the same. They both sync the monitor to the graphics card and let that component control the refresh rate on a continuously variable basis.

Can the user see a difference between the two? In our experience, there is no visual difference when frame rates are the same.

We did a blind test in 2015 and found that when all other parameters were equal between two monitors, G-Sync had a slight edge over the still-new-at-the-time FreeSync. But a lot has happened since then. Our monitor reviews have highlighted a few things that can add to or subtract from the gaming experience and that have little to nothing to do with refresh rates and Adaptive-Sync technologies.

The HDR quality is also subjective at this time, although G-Sync Ultimate claims better HDR due to its dynamic tone mapping.

It then comes down to the feature set of the rival technologies. What does all this mean? Let’s take a look.

G-Sync Features

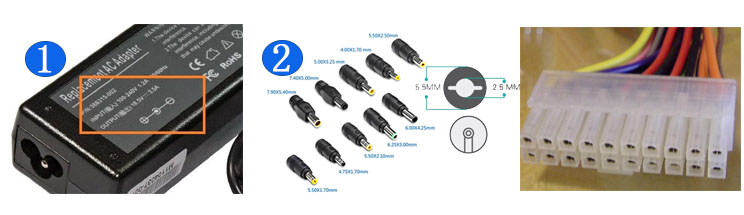

G-Sync monitors typically carry a price premium because they contain the extra hardware needed to support Nvidia’s version of adaptive refresh. When G-Sync was new (Nvidia introduced it in 2013), it would cost you about $200 extra to purchase the G-Sync version of a display, all other features and specs being the same. Today, the gap is closer to $100.

There are a few guarantees you get with G-Sync monitors that aren’t always available in their FreeSync counterparts. One is blur-reduction (ULMB) in the form of a backlight strobe. ULMB is Nvidia’s name for this feature; some FreeSync monitors also have it under a different name, but all G-Sync and G-Sync Ultimate (not G-Sync Compatible) monitors have it. While this works in place of Adaptive-Sync, some users prefer it, perceiving it to have lower input lag. We haven’t been able to substantiate this in testing. Of course, when you run at 100 frames per second (fps) or higher, blur is a non-issue, and input lag is super-low, so you might as well keep things tight with G-Sync engaged.

G-Sync also guarantees that you will never see a frame tear even at the lowest refresh rates. Below 30 Hz, G-Sync monitors double the frame renders (thereby doubling the refresh rate) to keep them running in the adaptive refresh range.
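The frame-doubling arithmetic can be sketched in a few lines of Python. This is an illustration only: the article describes doubling below 30 Hz, and extending that to tripling or more at very low frame rates is our assumption, as are the function name and the default 30 to 144 Hz range.

```python
def frame_multiplier(fps, min_hz=30, max_hz=144):
    """Return (multiplier, refresh_hz) for a given frame rate.

    Assumption: each frame is shown a whole number of times, using the
    smallest multiplier that lifts the refresh rate to at least min_hz.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    multiplier = 1
    while fps * multiplier < min_hz:
        multiplier += 1
    # Frame rates above the panel's maximum are capped at max_hz.
    return multiplier, min(fps * multiplier, max_hz)


for fps in (60, 25, 12):
    print(fps, frame_multiplier(fps))
```

At 25 fps, for example, each frame is drawn twice and the panel runs at 50 Hz, which keeps it inside the adaptive range.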

FreeSync Features

FreeSync has a price advantage over G-Sync because it uses an open standard created by VESA, Adaptive-Sync, which is also part of VESA’s DisplayPort spec.

Any DisplayPort interface version 1.2a or higher can support adaptive refresh rates. While a manufacturer may choose not to implement it, the hardware is already there, so there’s no additional production cost for the maker to implement FreeSync. FreeSync can also work with HDMI 1.4.

Because of its open nature, FreeSync implementation varies widely between monitors. Budget displays will get FreeSync at a 60 Hz or greater refresh rate and little else. You won’t get blur-reduction, and the lower limit of the Adaptive-Sync range might be just 48 Hz, compared to G-Sync’s 30 Hz.

But FreeSync Adaptive-Sync works just as well as any G-Sync monitor. Pricier FreeSync monitors add blur reduction and Low Framerate Compensation (LFC) to compete better against their G-Sync counterparts.
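A quick bit of arithmetic shows why the width of the Adaptive-Sync range matters for frame doubling. The sketch below is not AMD's certification logic, which this article does not spell out. It only checks whether a doubled frame rate lands inside a given range, and the function name and example ranges are hypothetical.

```python
def doubled_rate_fits(fps, min_hz, max_hz):
    """True if showing each frame twice puts the refresh rate inside the range."""
    return min_hz <= 2 * fps <= max_hz


# 40 fps is below a 48 Hz lower limit, so the display would need to double it.
print(doubled_rate_fits(40, min_hz=48, max_hz=60))   # 80 Hz exceeds a 60 Hz panel
print(doubled_rate_fits(40, min_hz=48, max_hz=144))  # 80 Hz fits a 144 Hz panel
```

On a narrow 48 to 60 Hz range, doubling 40 fps overshoots the panel's maximum, so frame rates below the lower limit fall outside adaptive operation. A wider range leaves room for the doubled rate.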

G-Sync vs. FreeSync: Which Is Better for HDR?

To add even more choices to a potentially confusing market, AMD and Nvidia have upped the game with new versions of their Adaptive-Sync technologies. This is justified by some important additions to display tech, namely HDR and extended color.

On the Nvidia side, a monitor can support G-Sync with HDR and extended color without earning the “Ultimate” certification. Nvidia assigns that moniker to specific monitors that include 1,000 nits peak brightness, which all the currently certified monitors achieve via the desirable full-array local dimming (FALD) backlight technology. There are many displays that are plain G-Sync (sans Ultimate) with HDR and extended color.

A monitor must support HDR and extended color for it to list FreeSync Premium Pro on its spec sheet. If you’re wondering about FreeSync 2, AMD has supplanted that with FreeSync Premium Pro. Functionally, they are the same.

Here’s another fact: If you have an HDR monitor that supports FreeSync with HDR, there’s a good chance it will also support G-Sync with HDR (and without HDR too). We’ve reviewed a number of these. We’ll provide a list at the end of this article with links to the reviews.

And what of FreeSync Premium Pro? It’s the same situation as G-Sync Ultimate in that it doesn’t offer anything new to core Adaptive-Sync tech. It simply means AMD has certified that monitor to provide a premium experience with at least a 120 Hz refresh rate, LFC and HDR. There is no brightness requirement nor is a FALD backlight part of the spec. Many FreeSync panels will qualify for Premium Pro status simply by supporting HDR and extended color.
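The tier descriptions above can be turned into a small checklist. The Python sketch below encodes only the criteria as this article states them, not AMD's or Nvidia's full certification programs. The `MonitorSpec` type, its field names and the example monitor are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class MonitorSpec:
    """Hypothetical spec-sheet fields used for this illustration."""
    max_refresh_hz: int
    has_lfc: bool
    has_hdr: bool
    peak_nits: int


def matches_premium_pro_description(spec):
    """As described in this article: at least 120 Hz, LFC and HDR."""
    return spec.max_refresh_hz >= 120 and spec.has_lfc and spec.has_hdr


def matches_ultimate_description(spec):
    """As described in this article: HDR with 1,000 nits peak brightness."""
    return spec.has_hdr and spec.peak_nits >= 1000


# A made-up 144 Hz HDR monitor that peaks at 600 nits.
example = MonitorSpec(max_refresh_hz=144, has_lfc=True, has_hdr=True, peak_nits=600)
print(matches_premium_pro_description(example))
print(matches_ultimate_description(example))
```

A display like this would fit the Premium Pro description, which has no brightness requirement, but would fall short of the 1,000-nit figure tied to G-Sync Ultimate.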

Running G-Sync on a FreeSync Monitor

We’ve covered this subject in multiple monitor reviews and in this article on how to run G-Sync on a FreeSync monitor. It’s pretty simple, actually.

First, you make sure you have the latest Nvidia drivers installed (anything dated after January of 2019 will work) and hook up a FreeSync monitor. Chances are that the FreeSync monitor will run G-Sync with your Nvidia graphics card — even if Nvidia hasn’t officially certified the monitor as G-Sync Compatible. And if the monitor supports HDR, that will likely work with G-Sync too.

A visit to Nvidia’s website reveals a list of monitors that have been certified to run G-Sync. Purchasing an official G-Sync Compatible monitor guarantees you’ll be able to use our instructions linked above.

FreeSync Monitors We’ve Tested That Support G-Sync

The monitors in the list below were all tested by Tom’s Hardware on systems with both Nvidia and AMD graphics cards, and all of them supported both G-Sync and FreeSync without issue. This includes monitors that Nvidia hasn’t officially certified as being G-Sync Compatible and, therefore, aren’t on Nvidia’s list. Additionally, the ones with HDR and extended color delivered Adaptive-Sync in games that support those features.

Acer Nitro XV273K

Acer XFA240

Alienware AW5520QF

AOC Agon AG493UCX

Dell S3220DGF

Gigabyte Aorus CV27F

Gigabyte Aorus CV27Q

Gigabyte Aorus FI27Q

Gigabyte Aorus KD25

HP Omen X 25f

Monoprice Zero-G 35

MSI Optix G27C4

MSI Optix MAG271CQR

Pixio PXC273

Razer Raptor 27

Samsung 27-Inch CRG5

ViewSonic Elite XG240R

ViewSonic Elite XG350R-C

Conclusion

So which is better: G-Sync or FreeSync? Well, with the features being so similar, there is no reason to select a particular monitor just because it runs one over the other. Since both technologies produce the same result, that contest is a wash at this point.

Instead, those shopping for a PC monitor have to decide which additional features are important to them. How high should the refresh rate be? How much resolution can your graphics card handle? Is high brightness important? Do you want HDR and extended color? If you’re an HDR user, can you afford a FALD backlight?

It’s the combination of these elements that impacts the gaming experience, not simply which Adaptive-Sync technology is in use. Ultimately, the more you spend, the better gaming monitor you’ll get. These days, when it comes to displays, you do get what you pay for. But you don’t have to pay thousands to get a good, smooth gaming experience.